When polling/reading a file in CSV format and the file doesn't have the expected number of columns, there are three scenarios that can happen when trying to translate this file into an XML using the Translate to Native Format activity in BPEL:

1) More commas in one of the lines than the number of columns at the header level

With adding extra commas, there is no parsing error to be handled and the file is processed. However, it causes the element right after it to be null, and for the rest of the values to be phased off by one, causing the last element to contain two or more values.

For example, with one extra comma:

With two extra commas:

2) Less commas in one of the lines than the number of columns at the header level

This gives the following error while reading the file:

Gives following error while polling:

Error while translating.

Translation exception.

Error occured while translating content from file /ftp_read/ftp_folder1/raw_data.csv

Please make sure that the file content conforms to the schema. Make necessary changes to the file content or the schema.

3) The header (if required) is missing from the file or the column names in the header are in a different order than as expected in the schema.

Solution:

To prevent BPEL from mis-translating these files due to an error in generating these files (and handle these errors more gracefully), you can create an XQuery file that will validate that the header exists (according to a predefined NXSD schema) and check that the number of commas in all of the rows matches that of the header (first row). This has to be done in XQuery since Oracle's XSLT 2.0 implementation doesn't support the replace and tokenize functions used here.

To check that the header exists:

declare function local:header_in_file($sourceInput as element() (:: schema-element(ns1:validationXMLRequest) ::),

$fileHeader as xs:string) {

let $header:=$fileHeader

(: remove anything that isn't a comma or letters/numbers :)

let $expectedHeader:=replace($header,'[^,A-Za-z0-9]','')

(: remove the last comma that was added from the transformation earlier :)

let $expectedHeader:=substring($expectedHeader,1,string-length($expectedHeader) - 1)

(: get the raw data :)

let $data:=tokenize(fn:data($sourceInput/ns1:PayLoad), '\n\r?')

(: obtain the first line from the incoming file, which is supposed to be the header :)

let $headerInFile:= $data[1]

(: remove anything that isn't a comma or letters/numbers :)

let $headerInFile:=replace($headerInFile,'[^,A-Za-z0-9]','')

let $result:=compare($headerInFile, $expectedHeader)

where $result !=0

return

<Error>The header in the file does not match the expected header.</Error>

};

To check that the number of commas matches in all the rows:

declare function local:func($sourceInput as element() (:: schema-element(ns1:validationXMLRequest) ::)) as element()* (:: schema-element(ns2:validationReport) ::) {

let $rawdata:=fn:data($sourceInput/ns1:PayLoad)

(: remove everything except commas, quotations (eg. "LastName, FirstName"), and newline:)

let $replace:=replace($rawdata,'[^,"\n\r]','')

(: remove instances of commas inside quotation marks (e.g. "LastName,FirstName") :)

let $commasOnly:=replace($replace,'"[,]*"','')

let $tokenized:=tokenize($commasOnly, '\n\r?')

let $header:=$tokenized[1]

let $total:=count($tokenized)

for $row at $pos in $tokenized

let $rowCommas:=string-length($row)

let $headerCommas:=string-length($header)

let $result:=compare($header,$row)

(:skipping the header and the last array which is an empty line :)

where $pos != 1 and $pos!= $total and $result != 0

return

<Error>

{concat('Row #',$pos, ' does not have the same number of commas as defined in the header. Header commas: ',$headerCommas, ' Row commas: ',$rowCommas)}

<Error>

};

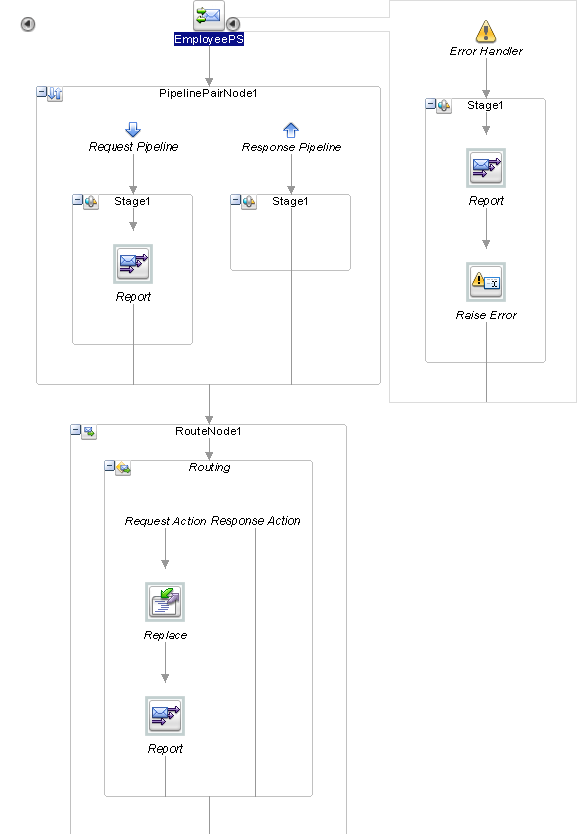

But how can you dynamically know the header of the file? Simple: using the NXSD schema, you populate a dummy variable based on the NXSD element:

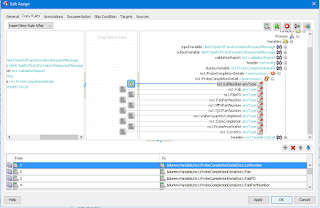

You then manually transform using the following XSLT to a string variable (in this case, $header):

<?xml version="1.0" encoding="windows-1252" ?>

<xsl:stylesheet version="2.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform"

xmlns:tns="http://www.test.com/soa/nxsdschema">

<xsl:output method="text"/>

<xsl:template match="/">

<!-- replace the select elements with the corresponding elements in NXSD schema -->

<xsl:for-each select="//tns:ProbeCompletionDetails/tns:ProbeCompletionDetail/*">

<xsl:value-of select="local-name()"/>,

</xsl:for-each>

</xsl:template>

</xsl:stylesheet>

This outputs in text form the elements of the NXSD schema (which are based on the header) separated by a comma.

And then do an assign activity like the following so that it gets the result as a string:

<assign name="Transform">

<copy><from>ora:processXSLT('Transformations/TransformGetHeader.xsl',$dummyVariable)</from>

<to>$header</to>

</copy>

</assign>

Note: The assign activity HAS to be done using this function and not any other variation (e.g. doXSLTransformForDoc etc.) since otherwise the text output from the XSLT will NOT be properly handled! Also in 12c, with the new folder structure, it is NOT necessary to add the two periods to access the Transformations folder (e.g. ../Transformations/TransformationFile.xsl). Doing so will cause for the XSLT file to not be recognized.

Note: The assign activity HAS to be done using this function and not any other variation (e.g. doXSLTransformForDoc etc.) since otherwise the text output from the XSLT will NOT be properly handled! Also in 12c, with the new folder structure, it is NOT necessary to add the two periods to access the Transformations folder (e.g. ../Transformations/TransformationFile.xsl). Doing so will cause for the XSLT file to not be recognized.

This $header variable is the one that is passed as an argument to the header_in_file function.

And...you're done! You've successfully [dynamically] validated your CSV file before translating it to XML, thus preventing ugly errors/mistranslations from occurring.