Problem: In an XQuery transformation file, an SQL query needs to be run to transform a date into the acceptable ISO date format to insert it into the database. Unlike the query-database function used in the SOA engine for XSLTs, the fn-bea:execute-sql returns the result enclosed in two XML elements. For example:

fn-bea:execute-sql('jdbc/DataSource',xs:QName('Element'),'select 123 result from DUAL')

Returns the value as such:

<Element>

<RESULT>123</RESULT>

</Element>

This isn't really helpful when you want to just get the result itself (without surrounded elements) to use it in an XQuery transformation.

Solution:

I had to create a local function that would only return the data (in the above example, return 123). The $input is the SQL query that needs to be run.

declare function local:stringToDate($input as xs:string){

let $value:=fn-bea:execute-sql('jdbc/DataSource',xs:QName('Element'),$input)

return fn:data($value/RESULT)

};

In this case, the SQL query I needed to run to transform the string date (e.g. 03/16/17 9:17 PM) into the ISO date format was:

select to_char(to_date('03/16/17 9:17 PM', 'MM/DD/YY HH:MI AM'),'YYYY-MM-DD"T"HH24:MI:SS') from dual;

And then I had call it as such:

<ns2:date>{local:stringToDate(fn:concat('select to_char(to_date(''',$inDate,''',''MM/DD/YY HH:MI AM''),''YYYY-MM-DD"T"HH24:MI:SS'') result from dual'))}</ns2:date>

Thursday, March 23, 2017

Thursday, March 2, 2017

Mediator - Instances stay in Running

This is a known bug where Mediator instances stay in running mode. Check the following Oracle Support Document for more information:

Oracle Support Document

Oracle Support Document

To switch from 'Deferred' to 'Immediate' auditing:

1. Go to EM

2. Expand 'SOA' on the left

3. Right click 'soa-infra'

4. Select 'SOA Administration' -> 'Common Properties'

5. At the bottom select 'More SOA Infra Advanced Configuration Properties...'

6. Select 'Audit Config'

7. Expand 'policies'

8. Here you'll see 'Element_0' and 'Element_1'

9. Expand 'Element_1' and change 'isActive' to 'false'

10. Expand 'Element_0' and change 'isActive' to 'true'

11. Click 'Apply'

Tuesday, February 28, 2017

OSB - Processing a SOAP inbound attachment

Problem:

A SOAP service returns a XOP/MOTM attachment as a zip file in its response. This must be unzipped to obtain the XML document inside and then write that file to a folder.

Solution:

There are a bunch of blogs already which describe how to retrieve a XOP/MOTM attachment from a web service response, but why not document my process as well.

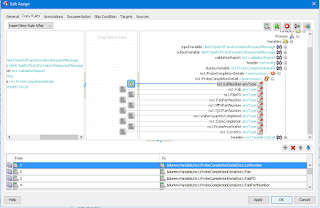

The Business Service must first be edited so that XOP/MOTM is enabled, which is disabled by default:

There are two options here as to how to handle the attachment. The difference is explained in this blogpost:

In my case I used Reference since I didn't really have to validate the XML or do anything else with it. This is how the web service would return its response:

So to get the binary-content element and pass it directly to the Java callout as a byte array, you have to select the whole binary-content element like so:

$body/typ:getDocumentsForFilePrefixResponse/*:result/*:Content/*:binary-content

The Java class which takes care of unzipping the file and returning the contents as String can be found in this blog post: An example of how to process a zipped file in OSB by Mike Muller. Putting it here as well for future reference:

To be able to write it to a file, all that's left is to call the encode method in the Java class, passing the result of the first Java callout to this method. This will transform the XML (as string) into base64 which will allow you to invoke the File adapter to write the file.

A SOAP service returns a XOP/MOTM attachment as a zip file in its response. This must be unzipped to obtain the XML document inside and then write that file to a folder.

Solution:

There are a bunch of blogs already which describe how to retrieve a XOP/MOTM attachment from a web service response, but why not document my process as well.

The Business Service must first be edited so that XOP/MOTM is enabled, which is disabled by default:

There are two options here as to how to handle the attachment. The difference is explained in this blogpost:

Include Binary Data by Reference: (Default) In an outbound response message, replace xop:Include elements with ctx:binary-content elements when setting up the $body message context variable.

Include Binary Data by Value: In an outbound response message, replace xop:Include elements with Base64-encoded text versions of corresponding binary data when setting up the $body message context variable.

In my case I used Reference since I didn't really have to validate the XML or do anything else with it. This is how the web service would return its response:

So to get the binary-content element and pass it directly to the Java callout as a byte array, you have to select the whole binary-content element like so:

$body/typ:getDocumentsForFilePrefixResponse/*:result/*:Content/*:binary-content

The Java class which takes care of unzipping the file and returning the contents as String can be found in this blog post: An example of how to process a zipped file in OSB by Mike Muller. Putting it here as well for future reference:

To be able to write it to a file, all that's left is to call the encode method in the Java class, passing the result of the first Java callout to this method. This will transform the XML (as string) into base64 which will allow you to invoke the File adapter to write the file.

Labels:

java,

motm,

motm attachment,

oracle service bus,

osb,

osb 12c,

soap,

soap attachments,

xop,

xop attachment

Monday, January 16, 2017

XQuery - Parsing/syntax validation of a CSV file (number of columns and expected header)

Problem:

When polling/reading a file in CSV format and the file doesn't have the expected number of columns, there are three scenarios that can happen when trying to translate this file into an XML using the Translate to Native Format activity in BPEL:

1) More commas in one of the lines than the number of columns at the header level

With adding extra commas, there is no parsing error to be handled and the file is processed. However, it causes the element right after it to be null, and for the rest of the values to be phased off by one, causing the last element to contain two or more values.

2) Less commas in one of the lines than the number of columns at the header level

When polling/reading a file in CSV format and the file doesn't have the expected number of columns, there are three scenarios that can happen when trying to translate this file into an XML using the Translate to Native Format activity in BPEL:

1) More commas in one of the lines than the number of columns at the header level

With adding extra commas, there is no parsing error to be handled and the file is processed. However, it causes the element right after it to be null, and for the rest of the values to be phased off by one, causing the last element to contain two or more values.

For example, with one extra comma:

With two extra commas:

2) Less commas in one of the lines than the number of columns at the header level

This gives the following error while reading the file:

Gives following error while polling:

Error while translating.

Translation exception.

Error occured while translating content from file /ftp_read/ftp_folder1/raw_data.csv

Please make sure that the file content conforms to the schema. Make necessary changes to the file content or the schema.

3) The header (if required) is missing from the file or the column names in the header are in a different order than as expected in the schema.

Solution:

To prevent BPEL from mis-translating these files due to an error in generating these files (and handle these errors more gracefully), you can create an XQuery file that will validate that the header exists (according to a predefined NXSD schema) and check that the number of commas in all of the rows matches that of the header (first row). This has to be done in XQuery since Oracle's XSLT 2.0 implementation doesn't support the replace and tokenize functions used here.

To check that the header exists:

declare function local:header_in_file($sourceInput as element() (:: schema-element(ns1:validationXMLRequest) ::),

$fileHeader as xs:string) {

let $header:=$fileHeader

(: remove anything that isn't a comma or letters/numbers :)

let $expectedHeader:=replace($header,'[^,A-Za-z0-9]','')

(: remove the last comma that was added from the transformation earlier :)

let $expectedHeader:=substring($expectedHeader,1,string-length($expectedHeader) - 1)

(: get the raw data :)

let $data:=tokenize(fn:data($sourceInput/ns1:PayLoad), '\n\r?')

(: obtain the first line from the incoming file, which is supposed to be the header :)

let $headerInFile:= $data[1]

(: remove anything that isn't a comma or letters/numbers :)

let $headerInFile:=replace($headerInFile,'[^,A-Za-z0-9]','')

let $result:=compare($headerInFile, $expectedHeader)

where $result !=0

return

<Error>The header in the file does not match the expected header.</Error>

};

To check that the number of commas matches in all the rows:

declare function local:func($sourceInput as element() (:: schema-element(ns1:validationXMLRequest) ::)) as element()* (:: schema-element(ns2:validationReport) ::) {

let $rawdata:=fn:data($sourceInput/ns1:PayLoad)

(: remove everything except commas, quotations (eg. "LastName, FirstName"), and newline:)

let $replace:=replace($rawdata,'[^,"\n\r]','')

(: remove instances of commas inside quotation marks (e.g. "LastName,FirstName") :)

let $commasOnly:=replace($replace,'"[,]*"','')

let $tokenized:=tokenize($commasOnly, '\n\r?')

let $header:=$tokenized[1]

let $total:=count($tokenized)

for $row at $pos in $tokenized

let $rowCommas:=string-length($row)

let $headerCommas:=string-length($header)

let $result:=compare($header,$row)

(:skipping the header and the last array which is an empty line :)

where $pos != 1 and $pos!= $total and $result != 0

return

<Error>

{concat('Row #',$pos, ' does not have the same number of commas as defined in the header. Header commas: ',$headerCommas, ' Row commas: ',$rowCommas)}

<Error>

};

But how can you dynamically know the header of the file? Simple: using the NXSD schema, you populate a dummy variable based on the NXSD element:

You then manually transform using the following XSLT to a string variable (in this case, $header):

<?xml version="1.0" encoding="windows-1252" ?>

<xsl:stylesheet version="2.0" xmlns:xsl="http://www.w3.org/1999/XSL/Transform"

xmlns:tns="http://www.test.com/soa/nxsdschema">

<xsl:output method="text"/>

<xsl:template match="/">

<!-- replace the select elements with the corresponding elements in NXSD schema -->

<xsl:for-each select="//tns:ProbeCompletionDetails/tns:ProbeCompletionDetail/*">

<xsl:value-of select="local-name()"/>,

</xsl:for-each>

</xsl:template>

</xsl:stylesheet>

This outputs in text form the elements of the NXSD schema (which are based on the header) separated by a comma.

And then do an assign activity like the following so that it gets the result as a string:

<assign name="Transform">

<copy><from>ora:processXSLT('Transformations/TransformGetHeader.xsl',$dummyVariable)</from>

<to>$header</to>

</copy>

</assign>

Note: The assign activity HAS to be done using this function and not any other variation (e.g. doXSLTransformForDoc etc.) since otherwise the text output from the XSLT will NOT be properly handled! Also in 12c, with the new folder structure, it is NOT necessary to add the two periods to access the Transformations folder (e.g. ../Transformations/TransformationFile.xsl). Doing so will cause for the XSLT file to not be recognized.

Note: The assign activity HAS to be done using this function and not any other variation (e.g. doXSLTransformForDoc etc.) since otherwise the text output from the XSLT will NOT be properly handled! Also in 12c, with the new folder structure, it is NOT necessary to add the two periods to access the Transformations folder (e.g. ../Transformations/TransformationFile.xsl). Doing so will cause for the XSLT file to not be recognized.

This $header variable is the one that is passed as an argument to the header_in_file function.

And...you're done! You've successfully [dynamically] validated your CSV file before translating it to XML, thus preventing ugly errors/mistranslations from occurring.

Wednesday, December 14, 2016

OSB - Using DVM in 12c using qualifiers

To use a DVM in OSB 12c which requires qualifiers, specify the function as such:

dvm:lookupValue(dvm-location, src-column, src-value, target-column, default-value, qualifiers[])

dvm:lookupValue(dvm-location, src-column, src-value, target-column, default-value, qualifiers[])

dvm:lookupValue('DVMQualifiers/Proxy/dvm-cities', 'CityCode',

'KN', 'CityName', 'NA', ('Country','USA'))

Labels:

dvm,

oracle osb,

oracle service bus,

oracle soa,

osb,

osb 12c,

soa

Monday, August 8, 2016

SOA - Unable to edit file/jms/db adapter; shows red cross mark

Problem:

Migrated composites to the 12c version are unable to be edited, even with the <?binding.jca dbAdapter_db.jca> fix. The adapter is also showing a red cross mark.

Solution:

The solution is provided in this Oracle community post:

Migrated composites to the 12c version are unable to be edited, even with the <?binding.jca dbAdapter_db.jca> fix. The adapter is also showing a red cross mark.

Solution:

The solution is provided in this Oracle community post:

Open .jca file for JMS adapter and change from adapter="JMS Adapter" to adapter="jms" . If you use database adapter as well , then change from adapter="Database Adapter" to adapter="db". ( ... adapter="File Adapter" to adapter="file")

The adapter is now able to be edited normally.

Labels:

bpel,

database adapter,

db adapter,

file adapter,

jdeveloper,

oracle soa,

oracle soa 12c

Wednesday, July 27, 2016

FYI - JMS Polling and Error Queues

FYI:

When polling a JMS queue in OSB, in order for it to go to the configured error queue, an error must be raised. Raising an error and then handling this error won't cause the message to be sent to the error queue. If the error is handled, then a fault must be raised again.

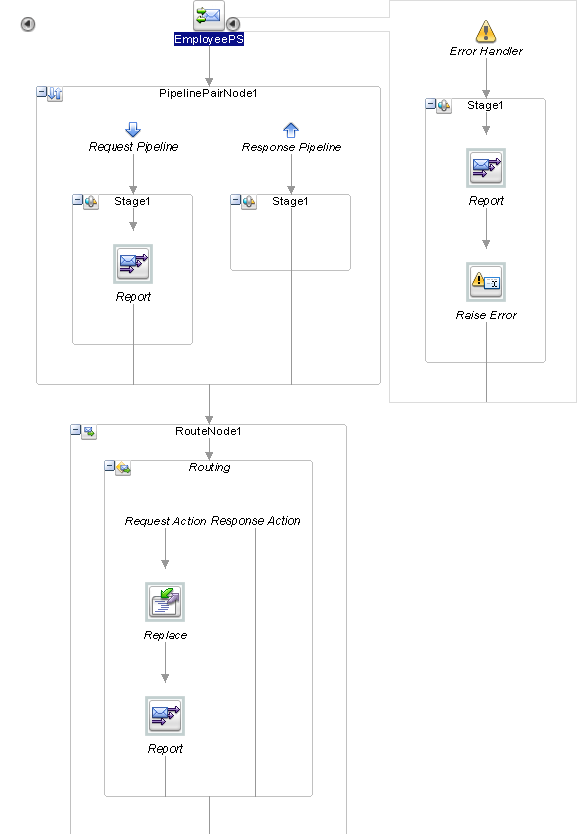

Take this simple proxy service as an example that polls from a JMS queue configured in weblogic and calls a stored procedure to insert the polled record:

EmployeePS Pipeline:

When polling a JMS queue in OSB, in order for it to go to the configured error queue, an error must be raised. Raising an error and then handling this error won't cause the message to be sent to the error queue. If the error is handled, then a fault must be raised again.

Take this simple proxy service as an example that polls from a JMS queue configured in weblogic and calls a stored procedure to insert the polled record:

EmployeePS Pipeline:

Should an unexpected error occur somewhere in this pipeline, it will be caught by the error handler. The error will be reported in the EM Message Reports but the error is raised again so that it can be sent to the error queue.

Doing a Reply with Failure will NOT send the message to the error queue. As explained by AbhishekJ here:

In case of JMS transport the JMS Queue or Topic will receive a NACK back but the message will NOT roll back and will be removed from the destination just like in case of Reply with Success. This happens because using a Reply action is considered as an exception being handled properly and a commit will be sent back to the JMS destination. To roll back the message ensure that you use Raise Error instead of a Reply action.

See also:

Transaction handling within OSB

Transaction handling within OSB

Labels:

fyi,

jms,

oracle service bus,

oracle soa,

oracle soa 12c,

osb,

osb 12c,

polling

Subscribe to:

Posts (Atom)